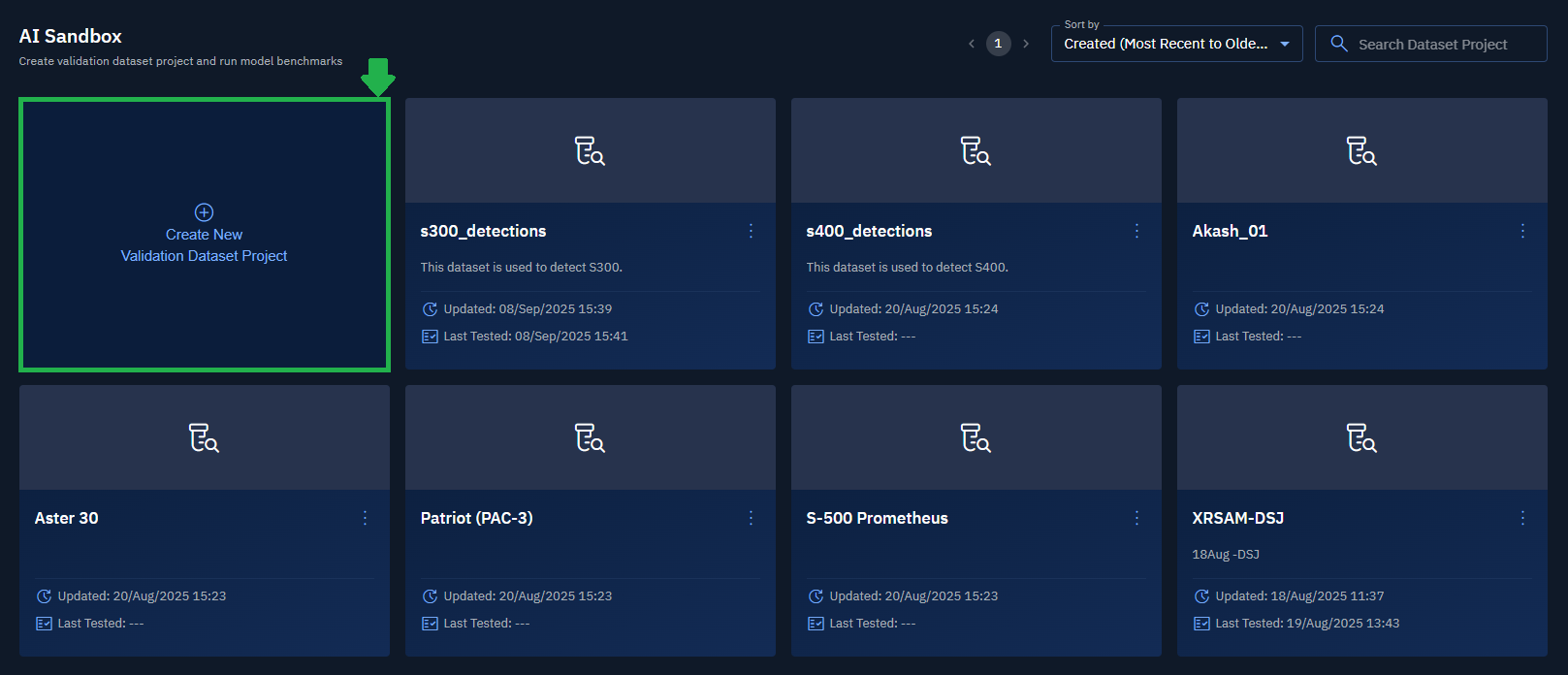

Overview: AI Sandbox

AI Sandbox is an interactive, secure space where you can test and validate AI models against curated datasets and view detailed test reports.

The sandbox is designed to help AI developers, data scientists, and analysts measure the performance of models before deploying them into production. The environment supports external models as well as Auto Model Development (AMD) models built within the platform.

What can you do with AI Sandbox?

You can use AI Sandbox to:

- Create and manage validation dataset projects from scratch, from a VDP, or from annotation layers.

- Commit stable versions of datasets to ensure consistency during evaluation.

- Select and test models against real annotated data.

- View detailed test reports to understand model performance.

- Improve model quality through iterative validation.

Typical Validation Workflow

You can validate a model in three main steps:

- Create a Validation Dataset Project: Start from scratch, import a VDP, or use an annotation project with layered features. When the dataset is final, commit it. You must commit the dataset before you can proceed to the next step. After you commit it, you can no longer add annotation datasets or layers.

- Search and Select a Model: Choose an external or AMD model and select the version you want to test.

- Run the Test and View Report: Launch the test, monitor its progress, and open the detailed performance report.

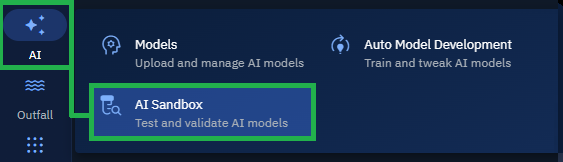

Accessing AI Sandbox

This section describes how to access the AI Sandbox module.

To access AI Sandbox, do the following:

- Log in to the platform.

- Click the AI module, and then click the AI Sandbox sub-module.

The AI Sandbox dashboard is displayed.